What is Inductive Bias?

Inductive bias can be defined as the set of assumptions or biases that a learning algorithm employs to make predictions on unseen data based on its training data. These assumptions are inherent in the algorithm's design and serve as a foundation for learning and generalization.

The inductive bias of an algorithm influences how it selects a hypothesis (a possible explanation or model) from the hypothesis space (the set of all possible hypotheses) that best fits the training data. It helps the algorithm navigate the trade-off between fitting the training data perfectly (overfitting) and generalizing well to unseen data (underfitting).

Types of Inductive Bias

Inductive bias can manifest in various forms, depending on the algorithm and its underlying assumptions. Some common types of inductive bias include:

- Bias towards simpler explanations: Many machine learning algorithms, such as decision trees and linear models, have a bias towards simpler hypotheses. They prefer explanations that are more parsimonious and less complex, as these are often more likely to generalize well to unseen data.

- Bias towards smoother functions: Algorithms like kernel methods or Gaussian processes have a bias towards smoother functions. They assume that neighboring points in the input space should have similar outputs, leading to smooth decision boundaries.

- Bias towards specific types of functions: Neural networks, for example, have a bias towards learning complex, nonlinear functions. This bias allows them to capture intricate patterns in the data but can also lead to overfitting if not regularized properly.

- Bias towards sparsity: Some algorithms, like Lasso regression, have a bias towards sparsity. They prefer solutions where only a few features are relevant, which can improve interpretability and generalization.

Importance of Inductive Bias

Inductive bias is crucial in machine learning as it helps algorithms generalize from limited training data to unseen data. Without a well-defined inductive bias, algorithms may struggle to make accurate predictions or may overfit the training data, leading to poor performance on new data.

Understanding the inductive bias of an algorithm is essential for model selection, as different biases may be more suitable for different types of data or tasks. It also provides insights into how the algorithm is learning and what assumptions it is making about the data, which can aid in interpreting its predictions and results.

Challenges and Considerations

While inductive bias is essential for learning, it can also introduce limitations and challenges. Biases that are too strong or inappropriate for the data can lead to poor generalization or biased predictions. Balancing bias with variance (the variability of predictions) is a key challenge in machine learning, requiring careful tuning and model selection.

Additionally, the choice of inductive bias can impact the interpretability of the model. Simpler biases may lead to more interpretable models, while more complex biases may sacrifice interpretability for improved performance.

Inductive Bias in

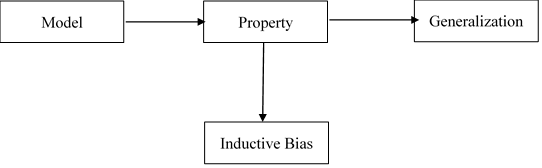

Before learning a model given a data and a learning algorithm, there are a few assumptions a learner makes about the algorithm. These assumptions are called the inductive bias. It is like the property of the algorithm.

For eg. in the case of decision trees, the depth of the tress is the inductive bias. If the depth of the tree is too low, then there is too much generalisation in the model. Similarly, if the depth of the tree is too much, there is too less generalisation and while testing the model on a new example, we might reach a particular example used to train the model. This may give us incorrect results.

Inductive bias in decision tree learning refers to the set of assumptions or preferences that guide the learning algorithm in selecting a particular hypothesis (decision tree) from the space of all possible hypotheses. Here are some key aspects of inductive bias in decision tree learning:

1. **Tree Structure Preference**: Decision tree algorithms often have a bias towards simpler tree structures (shorter trees with fewer branches) because they are considered more likely to generalize well to unseen data. This bias helps in avoiding overfitting, where the model fits the training data too closely and fails to generalize.

2. **Attribute Selection Bias**: Algorithms like ID3 (Iterative Dichotomiser 3) and its variants use specific heuristics (e.g., information gain, gain ratio) to select attributes that are most informative for splitting nodes in the tree. This bias towards certain attributes impacts the shape and depth of the resulting decision tree.

3. **Handling Missing Values**: Some decision tree algorithms handle missing attribute values by selecting splits that minimize information loss or by assigning probabilities to missing values based on the distribution of known values. This handling represents another form of inductive bias.

4. **Bias towards Majority Class**: In classification tasks, decision tree algorithms may exhibit bias towards predicting the majority class if the data is imbalanced, unless specific measures (like class weights or sampling techniques) are implemented to mitigate this bias.

Understanding the inductive bias of a decision tree algorithm is crucial because it influences the model's learning process, the complexity of the resulting tree, and its ability to generalize to unseen data effectively.

No comments:

Post a Comment